💬 About Me

Hi! I'm Xinyu Yang (杨心妤), a CS PhD student at Cornell University advised by Prof. Jennifer J. Sun. I received my bachelor's degree in Computer Science and Technology from Zhejiang University. During my undergraduate studies, I was fortunate to work with Prof. James Zou at Stanford and Prof. Fei Wu at ZJU.

My research interests lie in Machine Learning and Computer Vision.

📖 Education

- 2023.08 - Present Ph.D. in CIS, Cornell University, Ithaca, NY, USA

- 2019.09 - 2023.06 B.E. in Computer Science and Technology, Zhejiang University, Hangzhou, China

- Minor: Advanced Honor Class of Engineering Education Program (Honors program of Zhejiang University)

📝 Publications and Preprints

* denotes equal contribution

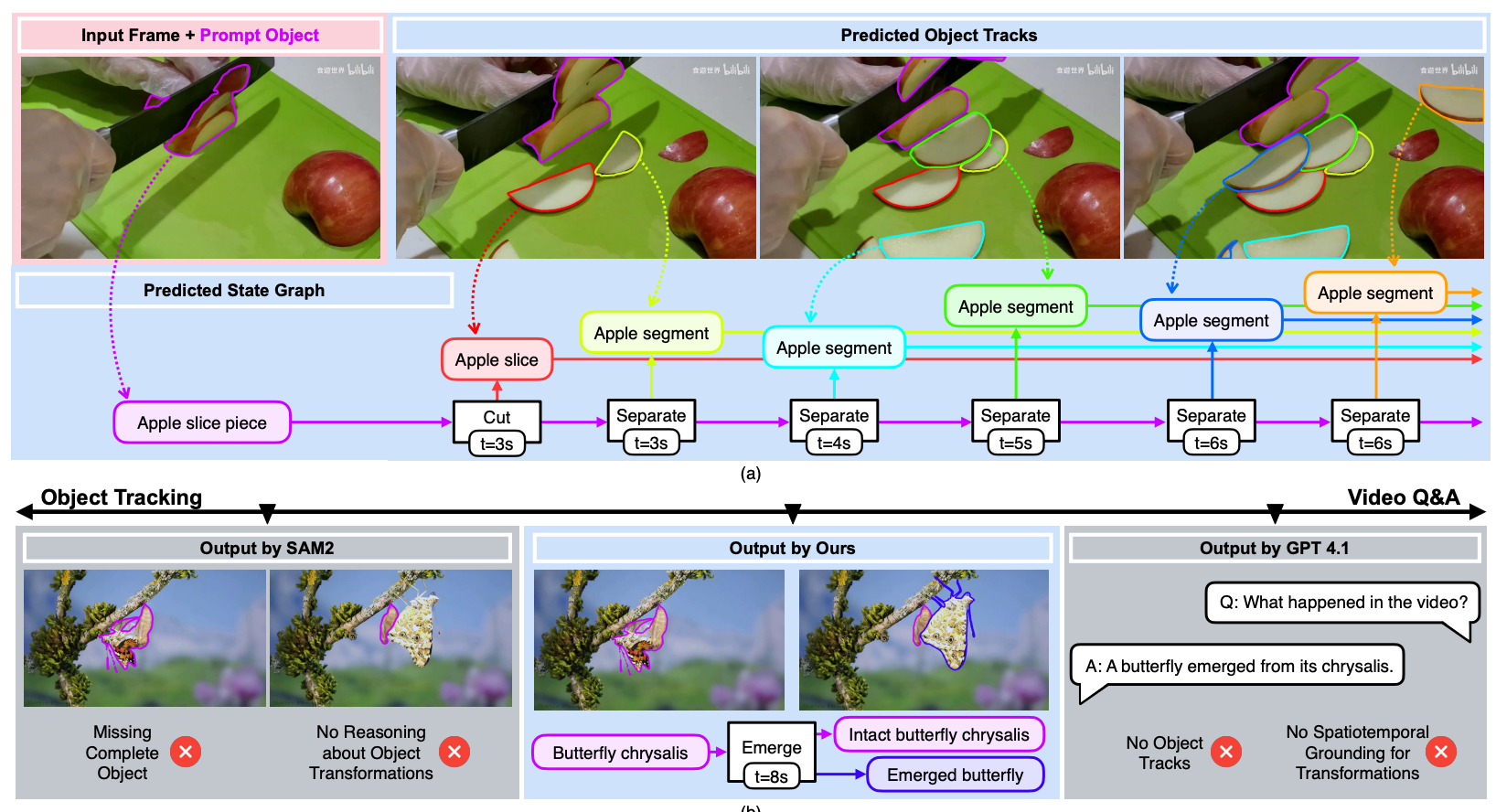

Tracking and Understanding Object Transformations

Yihong Sun, Xinyu Yang, Jennifer J. Sun, Bharath Hariharan

NeurIPS 2025

[PDF], [Code], [Poster], [Data], [Project Page]

We track objects through transformations while detecting and describing these state changes and resulting objects.

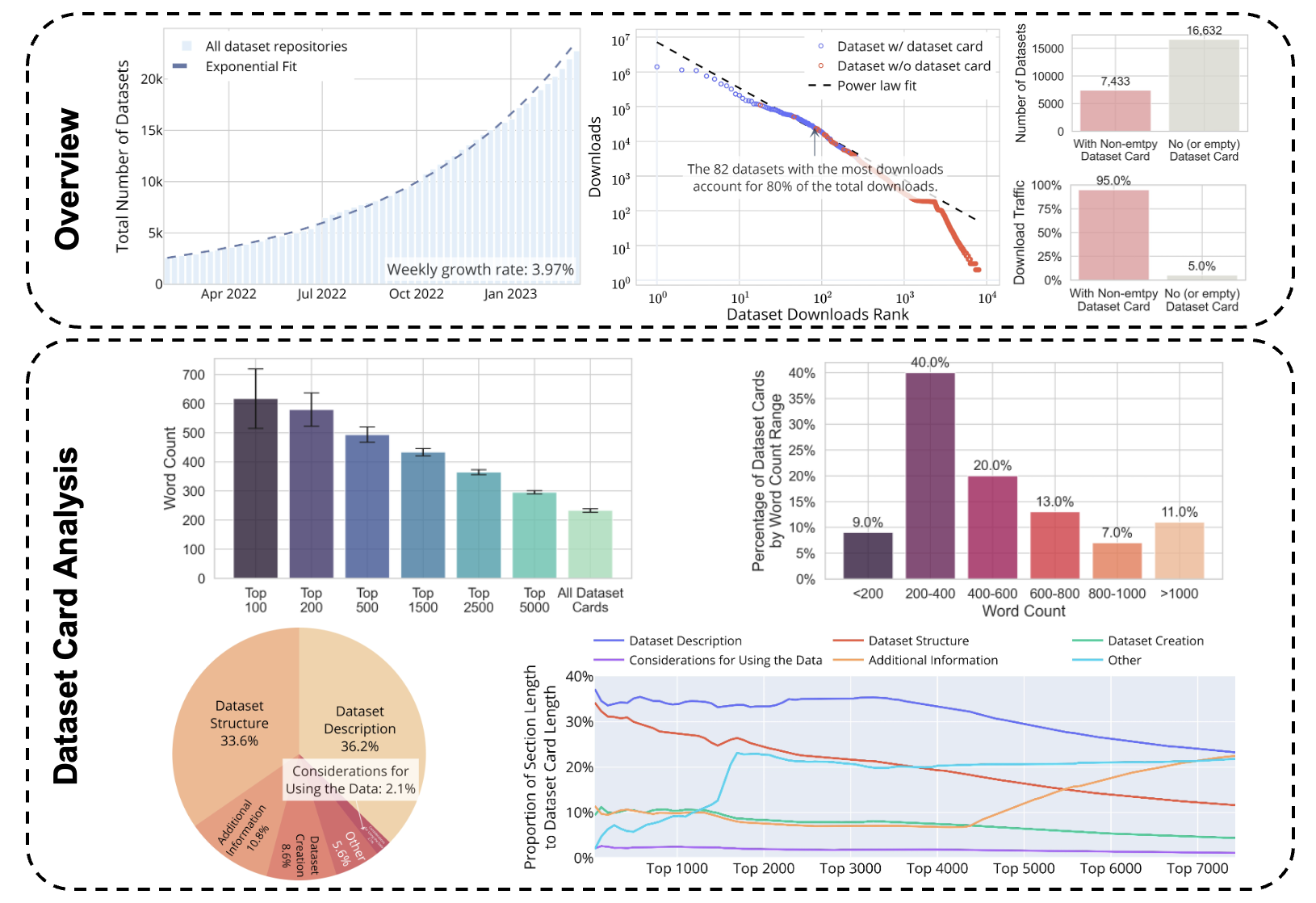

Navigating Dataset Documentation in AI: A Large-Scale Analysis of Dataset Cards on Hugging Face

Xinyu Yang*, Weixin Liang*, James Zou

ICLR 2024

We present a comprehensive large-scale analysis of 7,433 ML dataset cards on Hugging Face, providing an empirical view of community norms and practices around dataset documentation.

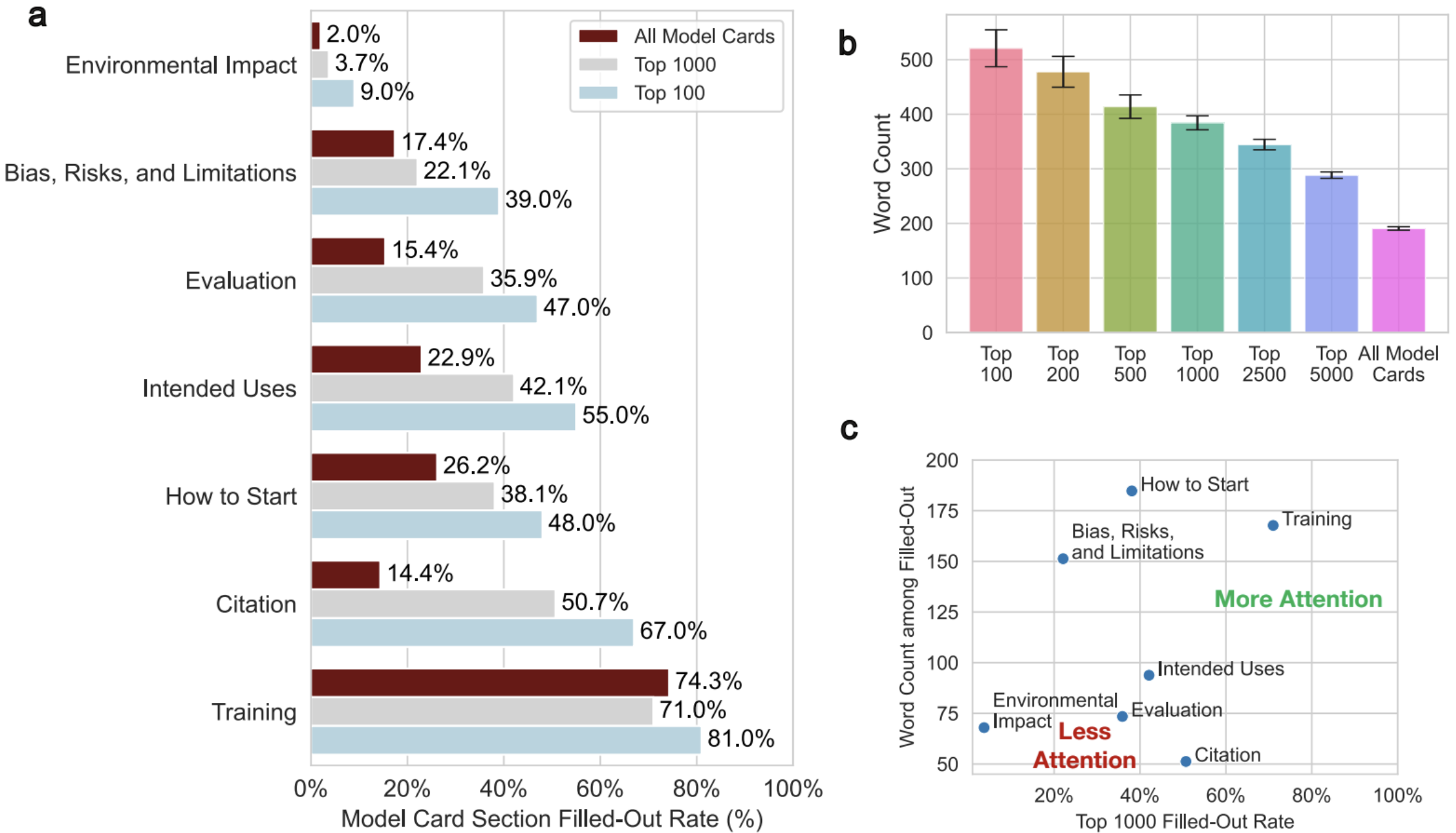

What’s documented in AI? Systematic Analysis of 32K AI Model Cards

Weixin Liang*, Nazneen Rajani*, Xinyu Yang*, Ezinwanne Ozoani, Eric Wu, Yiqun Chen, Daniel Scott Smith, James Zou

Nature Machine Intelligence 2024

We conduct a comprehensive analysis of 32,111 AI model cards on Hugging Face, providing a systematic assessment of community norms and practices around model documentation through large-scale data science and linguistic analysis.

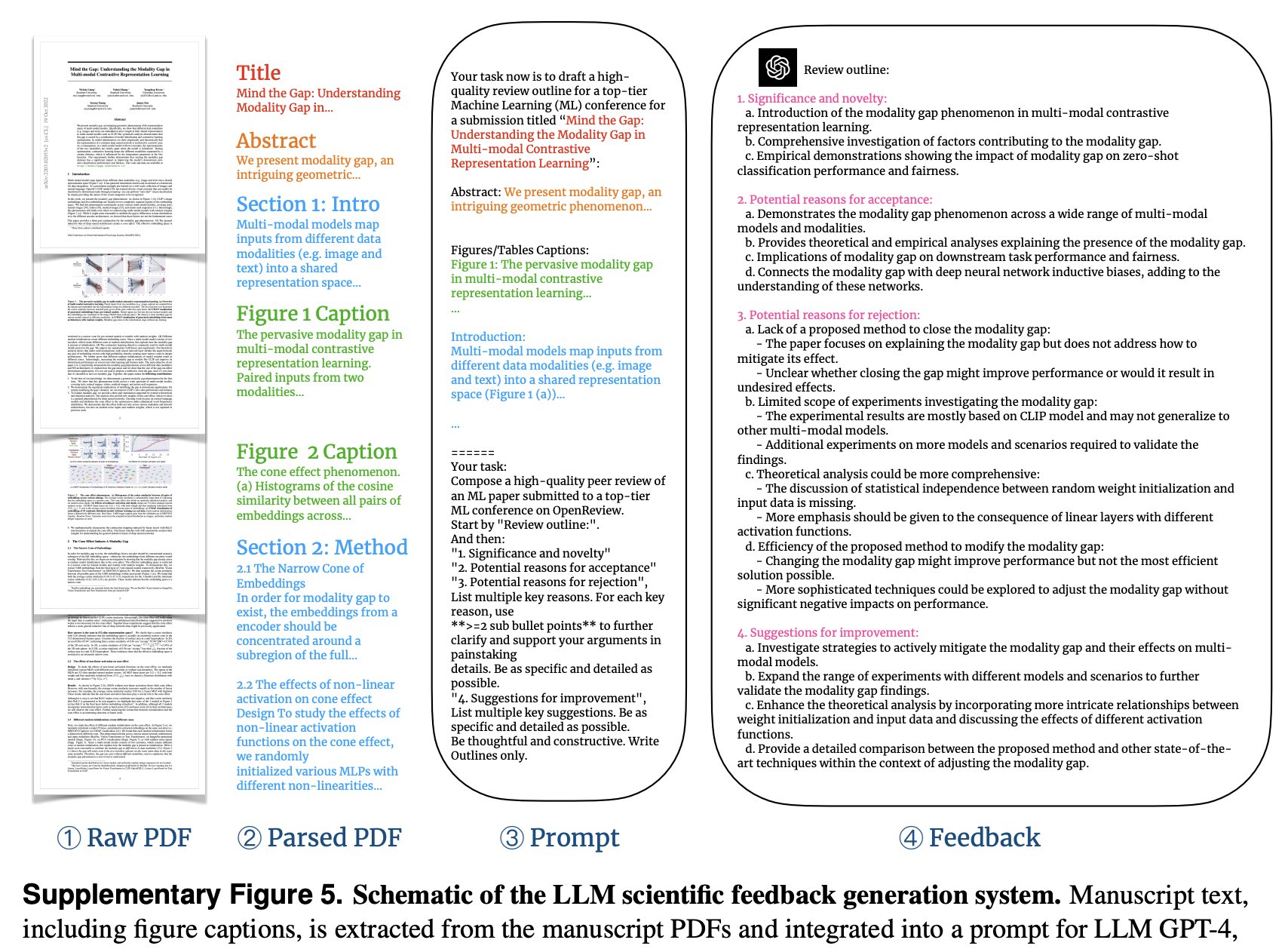

Can large language models provide useful feedback on research papers? A large-scale empirical analysis

Weixin Liang*, Yuhui Zhang*, Hancheng Cao*, Binglu Wang, Daisy Ding, Xinyu Yang, Kailas Vodrahalli, Siyu He, Daniel Smith, Yian Yin, Daniel A. McFarland, James Zou

NEJM AI 2024

We created an automated pipeline using GPT-4 to provide comments on the full PDFs of scientific papers and show that LLM and human feedback can complement each other.

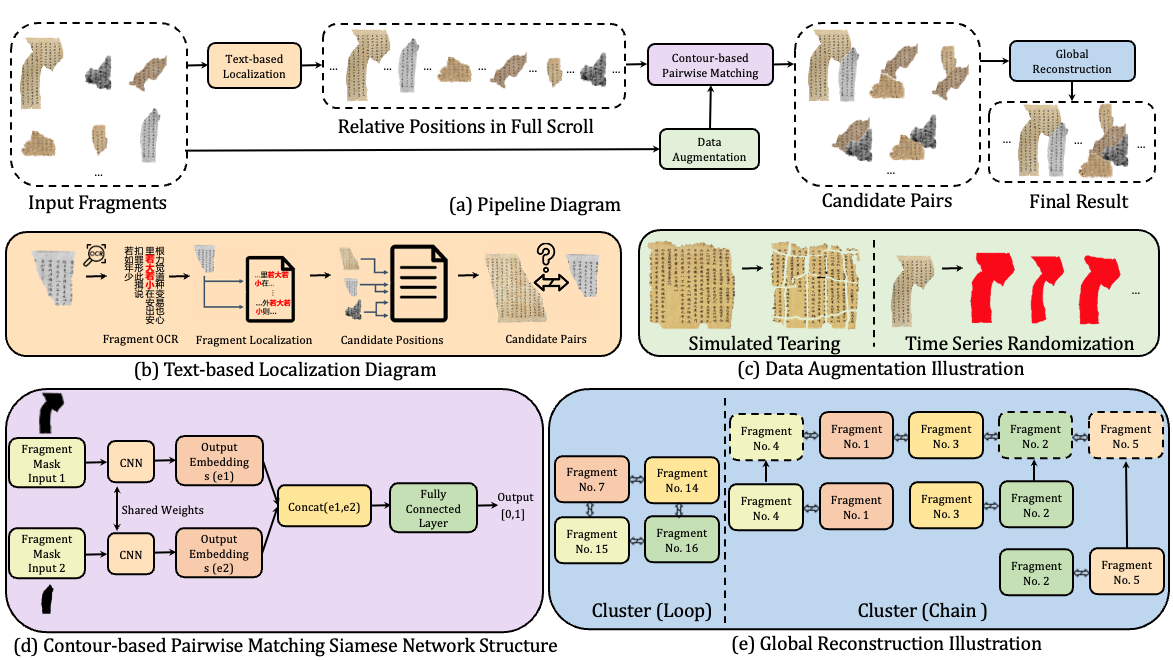

Reconnecting the Broken Civilization: Patchwork Integration of Fragments from Ancient Manuscripts

Yuqing Zhang*, Zhou Fang*, Xinyu Yang*, Shengyu Zhang, Baoyi He, Huaiyong Dou, Junchi Yan, Yongquan Zhang, Fei Wu

ACM MM 2023 (Oral)

We developed a multimodal pipeline for reconstructing Dunhuang manuscript fragments, combining text-based localization and a self-supervised contour matching framework with a global reconstruction process.

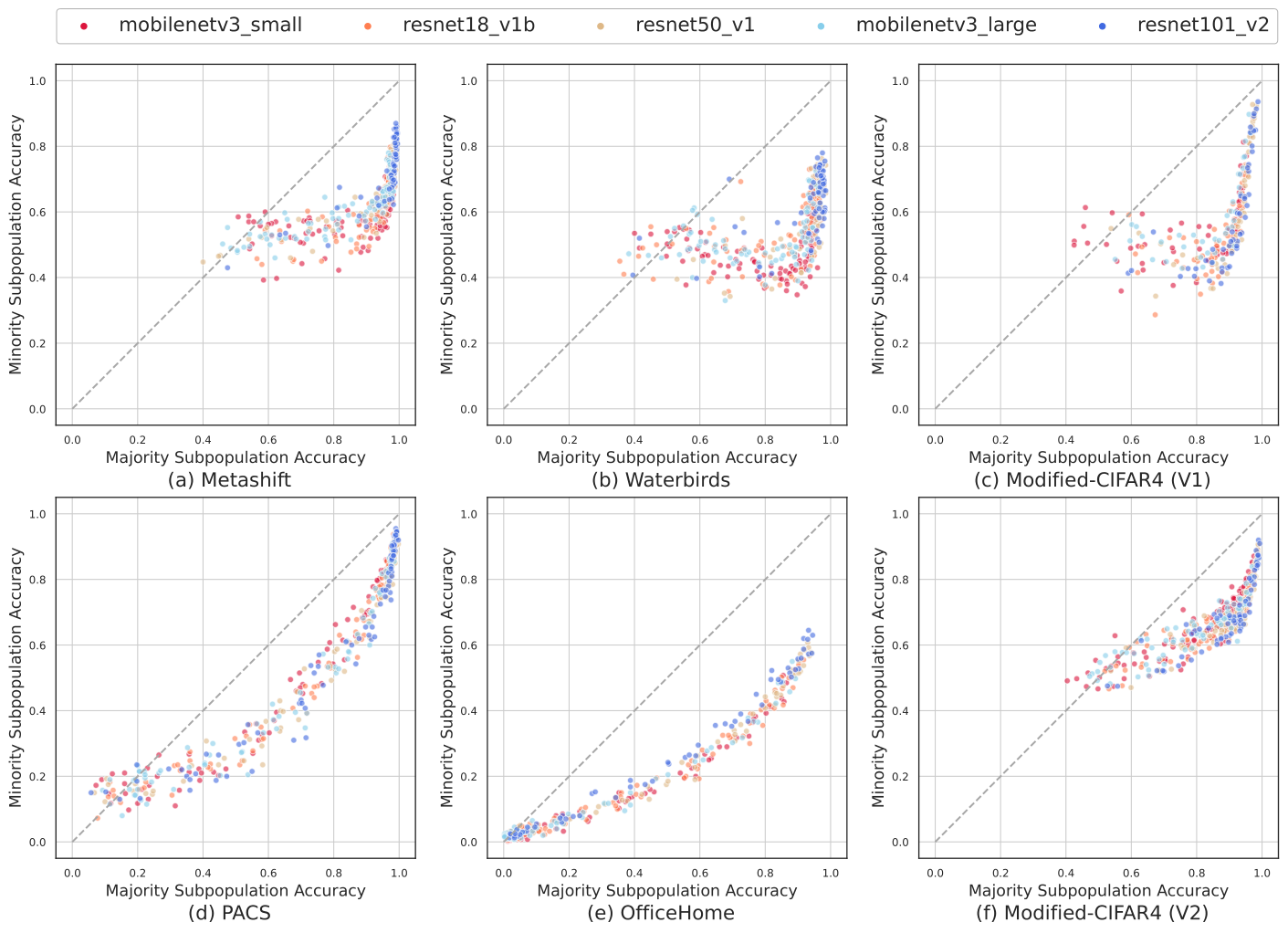

Accuracy on the Curve: On the Nonlinear Correlation of ML Performance Between Data Subpopulations

Weixin Liang*, Yining Mao*, Yongchan Kwon*, Xinyu Yang, James Zou

ICML 2023

[PDF], [Website], [Video], [Code]

We show that there is a “moon shape” correlation (parabolic uptrend curve) between the test performance on the majority subpopulation and the minority subpopulation.

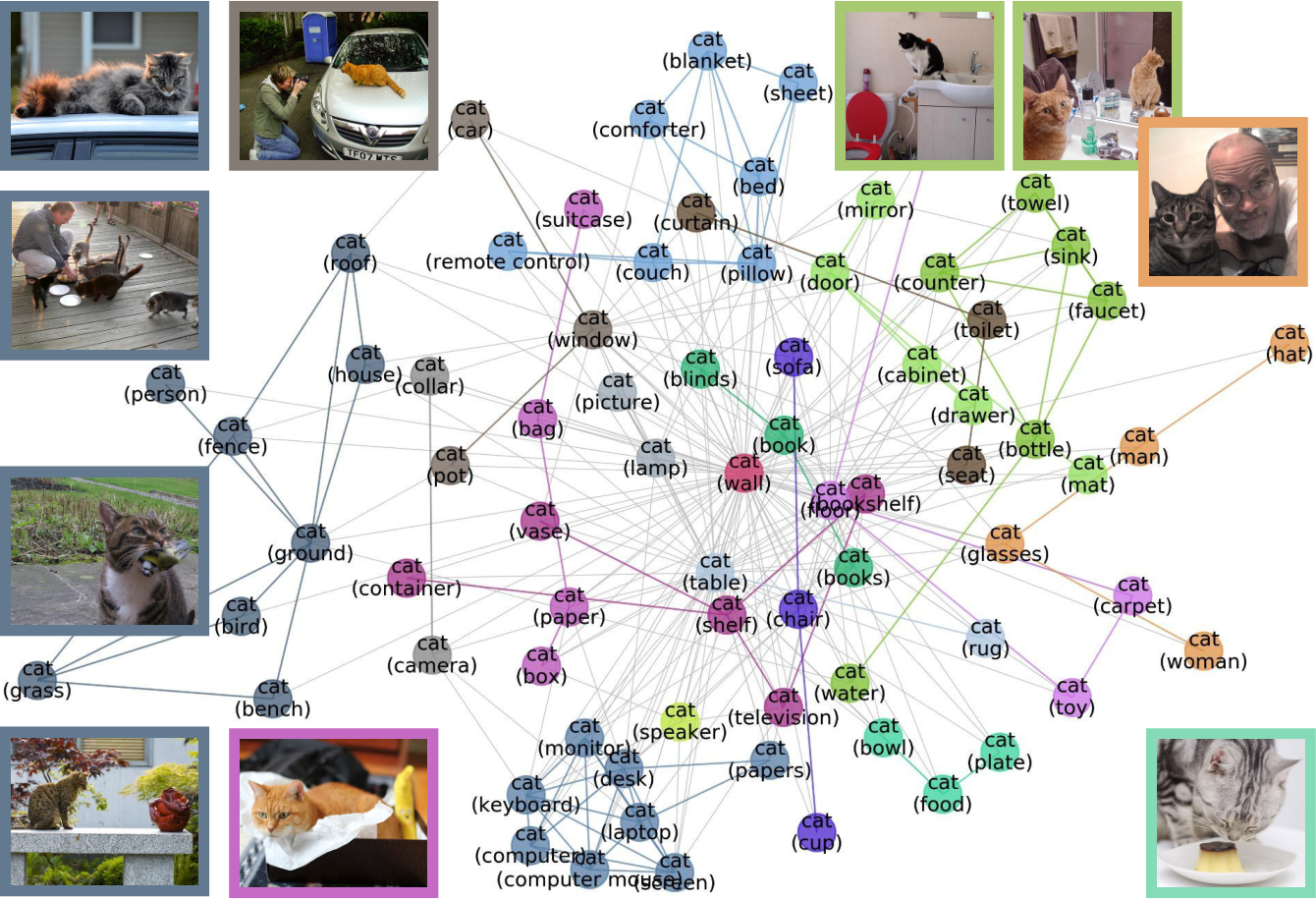

MetaShift: A Dataset of Datasets for Evaluating Contextual Distribution Shifts

Weixin Liang*, Xinyu Yang*, James Zou

Contributed Talk at ICML 2022 Workshop on Shift happens: Crowdsourcing metrics and test datasets beyond ImageNet

[PDF], [Website], [Video], [Code]

We present MetaShift on ImageNet to enable evaluating off-the-shelf ImageNet models under distribution shift.

🎖 Honors and Awards

- 2022 - 2023 Outstanding Graduate of Zhejiang Province

- 2022 - 2023 Outstanding Graduate of Zhejiang University

- 2021 - 2022 First-Class Scholarship for Outstanding Students of Zhejiang University (Top 3%)

- 2020 - 2021 Second-Class Scholarship for Outstanding Students of Zhejiang University (Top 8%)

- 2019 - 2020 National Scholarship (Top 1%)

- 2019 - 2020 First-Class Scholarship for Outstanding Students of Zhejiang University (Top 3%)

- 2019 - 2020 Outstanding Student Award, Yunfeng College (15 out of 800 students)

🗒 Services

- Teaching Assistant: Python Programming (Zhejiang University, Spring 2023), Networks (Cornell University, Fall 2024)

- Reviewer: NeurIPS ’24-’25, NeurIPS D&B ’24, ICLR ’25, ICML ’25

🎉 Misc

- I started learning Chinese calligraphy (the traditional art of writing Chinese characters with ink and brush) and sketching when I was nine. [Gallery]

- I love art and sports. I enjoy painting, photography, table tennis, piano, and classical music.